Deploying HBase with Docker

OS: CentOS 7

Installed Docker version: 20.10.9

I. Installing Docker

Remove the Docker packages shipped in the system repositories:

```bash
sudo yum remove docker
```

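There are three ways to install Docker:

- Add the official repository, then install with yum

- Download the package files manually and install them

- Install with the convenience script

The third method is used here: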

```bash
curl -fsSL https://get.docker.com -o get-docker.sh && sh get-docker.sh
```
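This setup was done with Docker 20.10.9. As a minimal sketch (the function name `version_ge` is hypothetical), the installed engine version can be compared against that number with GNU `sort -V`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2.
# Relies on `sort -V` (version sort) from GNU coreutils, available on CentOS 7.
version_ge() {
  # Version-sort both values; if the smaller one is $2, then $1 >= $2.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage, once Docker is installed and the daemon is running:
#   installed=$(docker version --format '{{.Server.Version}}')
#   version_ge "$installed" 20.10.9 && echo "Docker $installed is new enough"
```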

II. Single-host container orchestration with docker-compose

```bash
curl -L https://get.daocloud.io/docker/compose/releases/download/1.25.1/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
/usr/local/bin/docker-compose -f docker-compose-distributed-local.yml up -d
```

Once the containers are up, the ResourceManager web UI is served on port 8088.
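`up -d` returns as soon as the containers start, which can be before the ResourceManager UI on port 8088 actually accepts connections. A minimal sketch that polls a TCP port, assuming bash's built-in `/dev/tcp` redirection and coreutils `timeout`; `wait_for_port` is a hypothetical helper, and the usage line assumes port 8088 is published on the Docker host:

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until host:port accepts TCP connections.
# Arguments: host, port, number of attempts (default 60, about 1 s apart).
# Returns 0 once the port is open, 1 if every attempt fails.
wait_for_port() {
  local host=$1 port=$2 attempts=${3:-60} i=0
  while [ "$i" -lt "$attempts" ]; do
    # Bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
    # `timeout 1` keeps a single attempt from hanging on a filtered port.
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage (assumes 8088 is published on the Docker host):
#   wait_for_port localhost 8088 120 && echo "ResourceManager UI is up"
```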

III. Deploying with docker stack

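1. Create a swarm so that every node can be scheduled on equally

`docker swarm init` initializes a swarm.

`docker swarm join-token worker` prints the command, including the token, for joining as a worker.

`docker swarm join-token manager` prints the command, including the token, for joining as a manager.

Here the other nodes join as managers, because commands that show cluster details, such as `docker node ls`, only work on manager nodes.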

On each of the other servers, run a command similar to the following to join the swarm:

```bash
docker swarm join --token SWMTKN-1-0w6kjxx0j4epupf6r844dxpzoyxikrxqqgrqg319vqfg0y1gw7-31q237m76pyii2g0pw1c8nmtu 192.168.31.136:2377
```

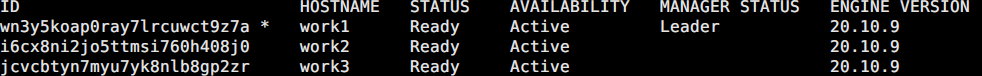
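Instead of copying the token by hand, the join command can be assembled on a manager from `docker swarm join-token -q manager`, which prints only the token. A minimal sketch; the helper name `swarm_join_cmd` is hypothetical, and 2377 is swarm's default management port:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the command other nodes run to join a swarm.
# Arguments: join token, manager address, management port (default 2377).
swarm_join_cmd() {
  printf 'docker swarm join --token %s %s:%s\n' "$1" "$2" "${3:-2377}"
}

# Usage on an existing manager (192.168.31.136 in this setup):
#   swarm_join_cmd "$(docker swarm join-token -q manager)" 192.168.31.136
```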

2. Run `docker node ls` to list the nodes in the swarm, then create the overlay network and deploy the stack:

```bash
docker network create --driver overlay --scope swarm hbase
docker stack deploy --compose-file docker-compose-v3.yml hdp
```

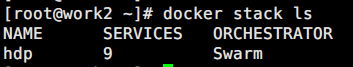

```bash
docker stack ls
docker stack down hadoop
docker network create --driver overlay --attachable --subnet 10.11.0.0/24 sg-hadoop
```

Deploying the stack prints output such as:

```
Creating service hdp_nodemanager
Creating service hdp_hbase-master
```
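`docker stack deploy` returns once the services are created, not once their tasks are running. A minimal sketch that checks convergence from `docker stack services <stack> --format '{{.Name}} {{.Replicas}}'` output, where the replicas column reads running/desired (e.g. `1/1`); the function name `stack_converged` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "NAME RUNNING/DESIRED" lines on stdin and succeed
# only if there is at least one service and every service is fully running.
stack_converged() {
  awk '
    NF {
      n++
      split($2, r, "/")          # "1/1" -> r[1]="1", r[2]="1"
      if (r[1] != r[2]) bad = 1  # fewer running tasks than desired
    }
    END { exit (bad || n == 0) }
  '
}

# Usage: poll the "hdp" stack every 5 s until all replicas are running.
#   until docker stack services hdp --format '{{.Name}} {{.Replicas}}' | stack_converged; do
#     sleep 5
#   done
```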

```bash
/opt/hbase-1.2.6/bin/hbase-daemon.sh restart regionserver
echo -e "hbase-region2\nhbase-region" > /etc/hbase/regionservers
```
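`echo -e` is not portable across shells (a POSIX `sh` such as dash prints the `-e` literally), so `printf` is a safer way to write one host per line. A minimal sketch; `write_regionservers` is a hypothetical helper, and the path and host names are the ones used above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: write one RegionServer host name per line.
# Arguments: target file, then the host names.
write_regionservers() {
  local file=$1
  shift
  printf '%s\n' "$@" > "$file"
}

# Usage, equivalent to the echo command above:
#   write_regionservers /etc/hbase/regionservers hbase-region2 hbase-region
```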

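When running `docker service update`, `--with-registry-auth` must be appended; it sends the registry authentication details to the swarm agents so that nodes can pull images from a private registry.

Check inside a container that the NameNode HTTP port 50070 is listening:

```bash
docker exec -t 70f261259630 netstat -an | grep LISTEN | grep 50070
```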
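`grep 50070` also matches lines where 50070 appears elsewhere, such as a remote port or a longer number. A minimal sketch that checks only the local-address column of `netstat -an` for an exact port; it also strips the carriage returns that `docker exec -t` adds when it allocates a TTY. The helper name `listening_on` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `netstat -an` output on stdin and succeed if some
# socket is in LISTEN state on exactly the given local port.
listening_on() {
  awk -v port="$1" '
    { sub(/\r$/, "") }                 # drop CR added by `docker exec -t`
    $NF == "LISTEN" {
      n = split($4, a, ":")            # local address: 0.0.0.0:50070 or :::50070
      if (a[n] == port) found = 1
    }
    END { exit !found }
  '
}

# Usage against the container from the example above:
#   docker exec 70f261259630 netstat -an | listening_on 50070 && echo "NameNode UI is listening"
```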

```yaml
version: '3'
```

Deploying the stack file creates the services automatically.

3. Use `docker stack ls` to view the created stack.

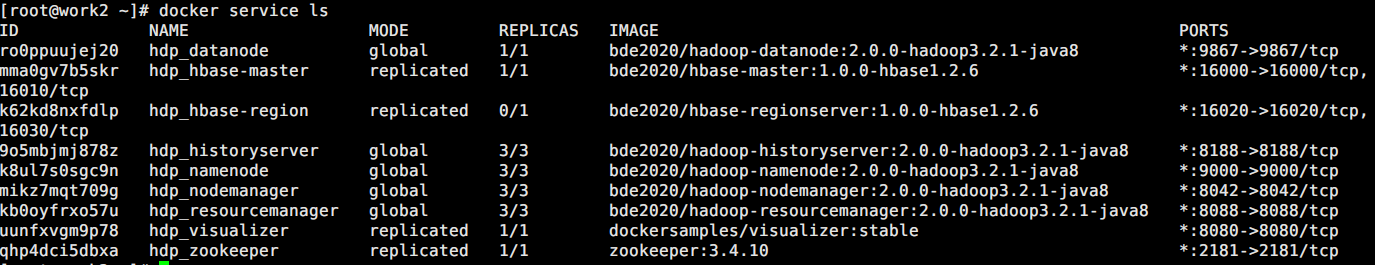

4. Use `docker service ls` to list the services.

5. Use the visualizer service defined in the yml to display how the services are deployed.

| Component | Daemon | Default port | Configuration | Purpose |
|---|---|---|---|---|
| HDFS | DataNode | 50010 | dfs.datanode.address | DataNode service port, used for data transfer |
| HDFS | DataNode | 50075 | dfs.datanode.http.address | HTTP port |
| HDFS | DataNode | 50475 | dfs.datanode.https.address | HTTPS port |
| HDFS | DataNode | 50020 | dfs.datanode.ipc.address | IPC port |
| HDFS | NameNode | 50070 | dfs.namenode.http-address | HTTP port |
| HDFS | NameNode | 50470 | dfs.namenode.https-address | HTTPS port |
| HDFS | NameNode | 8020 | fs.defaultFS | RPC port that accepts client connections, used to fetch file system metadata |
| HDFS | JournalNode | 8485 | dfs.journalnode.rpc-address | RPC service |
| HDFS | JournalNode | 8480 | dfs.journalnode.http-address | HTTP service |
| HDFS | ZKFC | 8019 | dfs.ha.zkfc.port | ZooKeeper FailoverController, used for NameNode HA |
| YARN | ResourceManager | 8032 | yarn.resourcemanager.address | RM applications manager (ASM) port |
| YARN | ResourceManager | 8030 | yarn.resourcemanager.scheduler.address | IPC port of the scheduler component |
| YARN | ResourceManager | 8031 | yarn.resourcemanager.resource-tracker.address | IPC |
| YARN | ResourceManager | 8033 | yarn.resourcemanager.admin.address | IPC |
| YARN | ResourceManager | 8088 | yarn.resourcemanager.webapp.address | HTTP port |
| YARN | NodeManager | 8040 | yarn.nodemanager.localizer.address | Localizer IPC |
| YARN | NodeManager | 8042 | yarn.nodemanager.webapp.address | HTTP port |
| YARN | NodeManager | 8041 | yarn.nodemanager.address | Container manager port in the NM |
| YARN | JobHistory Server | 10020 | mapreduce.jobhistory.address | IPC |
| YARN | JobHistory Server | 19888 | mapreduce.jobhistory.webapp.address | HTTP port |
| HBase | Master | 60000 | hbase.master.port | IPC (the HBase Master and any backup Masters); pre-1.0 default, 16000 since HBase 1.0 |
| HBase | Master | 60010 | hbase.master.info.port | HTTP port (the HBase Master and backup Masters, if any); pre-1.0 default, 16010 since HBase 1.0 |
| HBase | RegionServer | 60020 | hbase.regionserver.port | IPC (all worker nodes); pre-1.0 default, 16020 since HBase 1.0 |
| HBase | RegionServer | 60030 | hbase.regionserver.info.port | HTTP port; pre-1.0 default, 16030 since HBase 1.0 |
| HBase | HQuorumPeer | 2181 | hbase.zookeeper.property.clientPort | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| HBase | HQuorumPeer | 2888 | hbase.zookeeper.peerport | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| HBase | HQuorumPeer | 3888 | hbase.zookeeper.leaderport | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| Hive | Metastore | 9085 | `export PORT=` in /etc/default/hive-metastore | |
| Hive | HiveServer | 10000 | `export HIVE_SERVER2_THRIFT_PORT=` in /etc/hive/conf/hive-env.sh | |
| ZooKeeper | Server | 2181 | `clientPort=` in /etc/zookeeper/conf/zoo.cfg | Property from ZooKeeper's zoo.cfg; the port clients connect to |
| ZooKeeper | Server | 2888 | First port in `server.x=[hostname]:nnnnn[:nnnnn]` in /etc/zookeeper/conf/zoo.cfg | Used by followers to connect to the leader; only the leader listens on it |
| ZooKeeper | Server | 3888 | Second port in `server.x=[hostname]:nnnnn[:nnnnn]` in /etc/zookeeper/conf/zoo.cfg | Used by ZooKeeper peers to communicate with each other (leader election) |

X. Pitfalls encountered

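1. Watch out in the Hadoop configuration

When a value has the form host:port, the host must not contain characters such as `_`; otherwise Hadoop fails with an error saying the value does not "contain a valid host:port authority".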
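Hadoop checks these values with Java URI parsing, in which `_` is not a legal host-name character. A minimal pre-flight check, assuming host names restricted to letters, digits, hyphens and dots (RFC 1123 style); `valid_host_port` is a hypothetical helper, not part of Hadoop:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for Hadoop-style "host:port" values.
# Accepts hosts made of letters, digits, '-' and '.', not starting or ending
# with '-' or '.'; rejects '_' and any other character.
valid_host_port() {
  local value=$1 host port
  case $value in
    *:*) ;;
    *) return 1 ;;                      # no port at all
  esac
  host=${value%:*}
  port=${value##*:}
  case $host in
    '' | [-.]* | *[-.] | *[!A-Za-z0-9.-]*) return 1 ;;
  esac
  case $port in
    '' | *[!0-9]*) return 1 ;;
  esac
  [ "$port" -ge 1 ] && [ "$port" -le 65535 ]
}

# Usage:
#   valid_host_port hbase-master:60000     || echo "invalid"
#   valid_host_port hdp_hbase-master:60000 || echo "invalid"   # rejected: contains '_'
```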

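2. Environments initialized with docker stack

Every service name is prefixed with the stack name, and the prefix cannot be changed.

Once a container has joined the cluster network, a service can be reached either by its full name (the STACK_NAME prefix plus the key, e.g. `hdp_hbase-master`) or by the key defined under `services` in the yml. Keep this in mind.

After initialization every service carries the prefix, which broke access by domain name. A likely reason is that the prefix is joined with `_` (e.g. `hdp_nodemanager`), so the full names run into pitfall 1 when used in host:port settings.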
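Since the yml key also resolves inside the stack's network, one way around the prefix is to strip it and use the short name in Hadoop and HBase settings. A minimal sketch; `short_service_name` is hypothetical, while the `com.docker.stack.namespace` label in the usage line is the one `docker stack deploy` sets on the services it creates:

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a full swarm service name back into the short
# key from the yml by removing the "<stack>_" prefix.
# Arguments: stack name, full service name.
short_service_name() {
  printf '%s\n' "${2#"$1"_}"
}

# Usage: print the short names of every service in the "hdp" stack.
#   docker service ls --filter label=com.docker.stack.namespace=hdp --format '{{.Name}}' |
#     while read -r name; do short_service_name hdp "$name"; done
```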

3. Hahaha: HBase could not write to HDFS

The only running DataNode was excluded from the write:

```
File /hbase/.tmp/hbase.version could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation
```
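A first check is how many DataNodes the NameNode considers live. A minimal sketch that extracts the count from `hdfs dfsadmin -report`, assuming the Hadoop 2.x report format with a `Live datanodes (N):` line; the helper name is hypothetical. If the count is non-zero but the node is still excluded, one common explanation is that the HBase container cannot reach the DataNode on its data-transfer port (50010 in the table above).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the live DataNode count from
# `hdfs dfsadmin -report` output read on stdin (Hadoop 2.x format assumed).
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*$/\1/p'
}

# Usage inside the NameNode container:
#   hdfs dfsadmin -report | live_datanodes
```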

4. A configuration error made the service fail to bind to its IP

```
java.io.IOException: Problem binding to hbase-region/10.0.4.107:16040 : Cannot assign requested address. To switch ports use the 'hbase.regionserver.port' configuration property.
```
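`Cannot assign requested address` means the address the host name resolved to is not configured on any interface of the container. In swarm, one likely cause is that a service name resolves to the service's virtual IP (VIP), while `tasks.<service>` resolves to the containers' own addresses. A minimal sketch that checks whether a resolved address is local; the helper name is hypothetical, and the usage lines assume the image ships `getent` and iproute2:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if IP $1 appears, as a whole line, in the list
# of local addresses read from stdin (one address per line).
is_local_addr() {
  grep -qxF -- "$1"
}

# Usage inside the RegionServer container:
#   addr=$(getent hosts hbase-region | awk '{ print $1; exit }')
#   ip -o -4 addr show | awk '{ split($4, a, "/"); print a[1] }' |
#     is_local_addr "$addr" || echo "$addr is not local; binding to it will fail"
```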