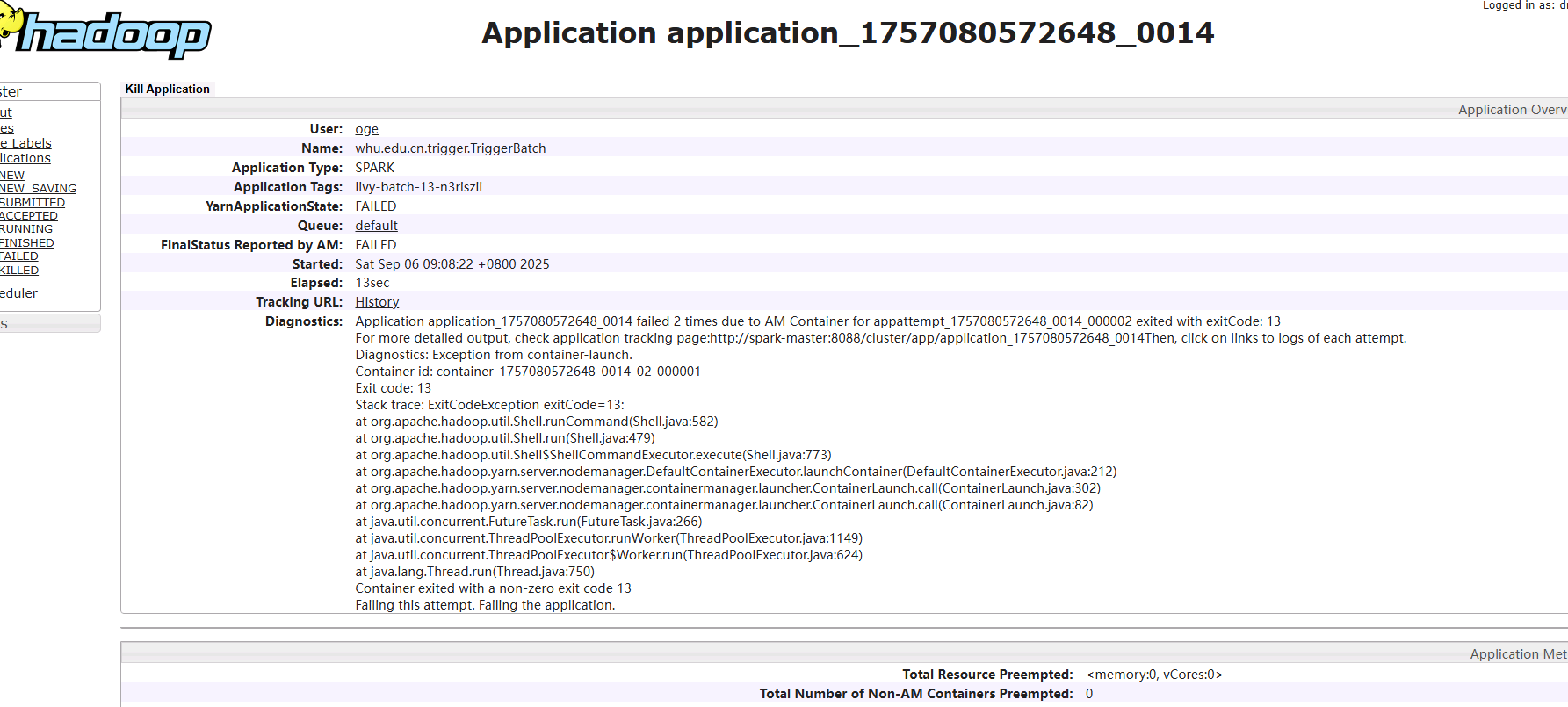

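We currently submit all batch jobs through Livy, passing the parameters and the jar to run. Session submissions work fine, but batch submissions kept failing with Exit code: 13. Everyone I asked, AI included, said it was a permissions problem. After checking that many times, I finally tracked it down by trimming the parameters one at a time: the request contained one parameter too many.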
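The "trim the parameters" approach can be made systematic. The following is a minimal sketch, assuming the suspect setting lives in the `conf` map of the batch request; the helper name `one_removed_variants` and the shortened payload are hypothetical and only for illustration. Each variant drops exactly one `conf` entry, so submitting them in turn shows which entry the failure depends on.

```python
import copy


def one_removed_variants(payload):
    """Yield (removed_key, new_payload) pairs, each dropping one conf entry."""
    for key in sorted(payload.get("conf", {})):
        variant = copy.deepcopy(payload)  # never mutate the original request
        del variant["conf"][key]
        yield key, variant


# Hypothetical, shortened payload; the real request has more fields.
base = {
    "file": "local:computation_ogc.jar",
    "className": "TriggerBatch",
    "conf": {
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.driver.maxResultSize": "2g",
    },
}

variants = list(one_removed_variants(base))
for removed, variant in variants:
    print("without", removed, "->", sorted(variant["conf"]))
```

If the batch succeeds once a given key is removed, that key (or its value) is the one to look at.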

This is the batch job submitted through Livy batch. It currently fails, although the same job succeeds when I submit it through a session.

{ "args": [ " { \"0\":{ \"functionInvocationValue\":{ \"functionName\":\"Coverage.export\",\"arguments\":{ \"coverage\":{ \"functionInvocationValue\":{ \"functionName\":\"Coverage.addStyles\",\"arguments\":{ \"coverage\":{ \"functionInvocationValue\":{ \"functionName\":\"Coverage.aspect\",\"arguments\":{ \"coverage\":{ \"functionInvocationValue\":{ \"functionName\":\"Service.getCoverage\",\"arguments\":{ \"coverageID\":{ \"constantValue\":\"ASTGTM_N28E056\" } ,\"baseUrl\":{ \"constantValue\":\"http://localhost\" } ,\"productID\":{ \"constantValue\":\"ASTER_GDEM_DEM30\" } } } } ,\"radius\":{ \"constantValue\":1 } } } } ,\"min\":{ \"constantValue\":-1 } ,\"max\":{ \"constantValue\":1 } ,\"palette\":{ \"constantValue\":[\"#808080\",\"#949494\",\"#a9a9a9\",\"#bdbebd\",\"#d3d3d3\",\"#e9e9e9\"] } } } } } } } ,\"isBatch\":1 } ", "f950cff2-07c8-461a-9c24-9162d59e2666_1757072928459_0", "f950cff2-07c8-461a-9c24-9162d59e2666", "EPSG:4326", "1000", "result", "file_2025_09_05_19_48_47", "tif" ], "numExecutors": 3, "driverMemory": "2g", "executorMemory": "2g", "file": "local:computation_ogc.jar", "driverCores": 1, "executorCores": 4, "className": "TriggerBatch", "conf": { "spark.kryoserializer.buffer.max": "5g", "spark.driver.maxResultSize": "2g", "spark.executor.extraClassPath": "local://jars/*", "spark.driver.extraClassPath": "local:/jars/*", "spark.serializer": "org.apache.spark.serializer.KryoSerializer", "spark.executor.memoryOverhead": "2g" } }
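Hand-escaping the nested JSON argument is easy to get wrong, so here is a minimal sketch of building the same kind of request body programmatically. It assumes the body is sent to Livy's `POST /batches` endpoint; the function name `build_batch_payload` and the shortened `job` object are hypothetical, and the HTTP call itself (including Kerberos/SPNEGO authentication on this cluster) is left out.

```python
import json


def build_batch_payload(job, extra_args, conf):
    """Build a Livy batch request body; json.dumps handles quote escaping."""
    return {
        "file": "local:computation_ogc.jar",
        "className": "TriggerBatch",
        # The job description is passed as ONE string argument, compactly encoded.
        "args": [json.dumps(job, separators=(",", ":"))] + list(extra_args),
        "numExecutors": 3,
        "driverMemory": "2g",
        "executorMemory": "2g",
        "driverCores": 1,
        "executorCores": 4,
        "conf": dict(conf),
    }


# Shortened, illustrative job object (not the full expression from the post).
job = {"0": {"functionInvocationValue": {"functionName": "Coverage.export"}}, "isBatch": 1}
payload = build_batch_payload(
    job,
    ["EPSG:4326", "1000", "result", "tif"],
    {"spark.serializer": "org.apache.spark.serializer.KryoSerializer"},
)
body = json.dumps(payload)
print(body)
```

Serializing twice (once for the argument, once for the request) produces the `\"` escaping seen in the request above without any manual editing.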

The failure is reported as:

Stack trace: ExitCodeException exitCode=13:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:582)

at org.apache.hadoop.util.Shell.run(Shell.java:479)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:773)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:212)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Container exited with a non-zero exit code 13

Failing this attempt.
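This stack trace comes from the NodeManager's container launcher, so it only says that the ApplicationMaster process exited with code 13, not why. The sketch below assumes the exit-code constants as I recall them from Spark's `ApplicationMaster` source (verify them against your Spark version). Under that assumption, 13 means the SparkContext was not initialized, which is not the same thing as an operating-system permission error. The helper name `explain_exit` is hypothetical.

```python
import re

# Assumption: values recalled from Spark's ApplicationMaster object; check your version.
SPARK_AM_EXIT_CODES = {
    10: "EXIT_UNCAUGHT_EXCEPTION",
    11: "EXIT_MAX_EXECUTOR_FAILURES",
    12: "EXIT_REPORTER_FAILURE",
    13: "EXIT_SC_NOT_INITED",
    14: "EXIT_SECURITY",
    15: "EXIT_EXCEPTION_USER_CLASS",
    16: "EXIT_EARLY",
}


def explain_exit(diagnostics):
    """Return (code, name) for the first 'exitCode=N' found, or None."""
    match = re.search(r"exitCode=(\d+)", diagnostics)
    if match is None:
        return None
    code = int(match.group(1))
    return code, SPARK_AM_EXIT_CODES.get(code, "UNKNOWN")


result = explain_exit("Stack trace: ExitCodeException exitCode=13:")
print(result)
```

The underlying exception is normally in the stderr log of the AM container rather than in this launcher trace.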

Submitting the parameters manually with spark-submit succeeds:

/data/server/spark-3.0.0/bin/spark-submit --verbose --class TriggerBatch --conf spark.executor.instances=2 --conf spark.executor.memory=2g --conf spark.executor.memoryOverhead=2g --conf spark.serializer=org.apache.spark.serializer.KryoSerializer --conf spark.driver.memory=8g --conf spark.yarn.tags=livy-batch-0-mqY0FpT9 --conf spark.driver.cores=2 --conf spark.yarn.submit.waitAppCompletion=false --conf spark.executor.extraClassPath=local:/jars/* --conf spark.executor.cores=2 --conf spark.driver.extraClassPath=local:/jars/* local:/computation_ogc.jar '{"0":{"functionInvocationValue":{"functionName":"Coverage.export","arguments":{"coverage":{"functionInvocationValue":{"functionName":"Coverage.addStyles","arguments":{"coverage":{"functionInvocationValue":{"functionName":"Coverage.aspect","arguments":{"coverage":{"functionInvocationValue":{"functionName":"Service.getCoverage","arguments":{"coverageID":{"constantValue":"ASTGTM_N28E056"},"baseUrl":{"constantValue":"http://localhost"},"productID":{"constantValue":"ASTER_GDEM_DEM30"}}}},"radius":{"constantValue":1}}}},"min":{"constantValue":-1},"max":{"constantValue":1},"palette":{"constantValue":["#808080","#949494","#a9a9a9","#bdbebd","#d3d3d3","#e9e9e9"]}}}}}}},"isBatch":1}'
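Since the manual command works and the Livy request does not, comparing the two sets of Spark properties narrows the search. The sketch below copies the `conf` map from the Livy request and the `--conf` options from the manual command. Top-level Livy fields such as `numExecutors` are deliberately left out, and the variable names are my own.

```python
livy_conf = {
    "spark.kryoserializer.buffer.max": "5g",
    "spark.driver.maxResultSize": "2g",
    "spark.executor.extraClassPath": "local://jars/*",
    "spark.driver.extraClassPath": "local:/jars/*",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    "spark.executor.memoryOverhead": "2g",
}

manual_conf = {
    "spark.executor.instances": "2",
    "spark.executor.memory": "2g",
    "spark.executor.memoryOverhead": "2g",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    "spark.driver.memory": "8g",
    "spark.yarn.tags": "livy-batch-0-mqY0FpT9",
    "spark.driver.cores": "2",
    "spark.yarn.submit.waitAppCompletion": "false",
    "spark.executor.extraClassPath": "local:/jars/*",
    "spark.executor.cores": "2",
    "spark.driver.extraClassPath": "local:/jars/*",
}

# Keys present only in the failing request, and shared keys whose values differ.
only_in_livy = sorted(set(livy_conf) - set(manual_conf))
changed = sorted(
    key for key in set(livy_conf) & set(manual_conf)
    if livy_conf[key] != manual_conf[key]
)

print("only in Livy request:", only_in_livy)
print("different values:", changed)
```

The post does not say which parameter was the extra one, so these are only candidates. One plausible suspect: the Spark configuration documentation states that `spark.kryoserializer.buffer.max` must be less than 2048m, and the failing request sets it to `5g`.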

The ResourceManager log at /data/server/hadoop-2.7.3/logs/yarn-oge-resourcemanager-spark-master.log shows the following:

2025-09-05 19:48:51,036 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for oge/spark-master.example.com@EXAMPLE.COM (auth:KERBEROS) 2025-09-05 19:48:51,039 INFO SecurityLogger.org.apache.hadoop.security.authorize.ServiceAuthorizationManager: Authorization successful for oge/spark-master.example.com@EXAMPLE.COM (auth:KERBEROS) for protocol=interface org.apache.hadoop.yarn.api.ApplicationClientProtocolPB 2025-09-05 19:48:53,342 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for oge/spark-master.example.com@EXAMPLE.COM (auth:KERBEROS) 2025-09-05 19:48:53,348 INFO SecurityLogger.org.apache.hadoop.security.authorize.ServiceAuthorizationManager: Authorization successful for oge/spark-master.example.com@EXAMPLE.COM (auth:KERBEROS) for protocol=interface org.apache.hadoop.yarn.api.ApplicationClientProtocolPB 2025-09-05 19:48:53,363 INFO org.apache.hadoop.yarn.server.resourcemanager.ClientRMService: Allocated new applicationId: 13 2025-09-05 19:48:57,110 WARN org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: The specific max attempts: 0 for application: 13 is invalid, because it is out of the range [1, 2]. Use the global max attempts instead. 
2025-09-05 19:48:57,111 INFO org.apache.hadoop.yarn.server.resourcemanager.ClientRMService: Application with id 13 submitted by user oge 2025-09-05 19:48:57,111 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge IP=192.200.34.42 OPERATION=Submit Application Request TARGET=ClientRMService RESULT=SUCCESS APPID=application_1757068896457_0013 2025-09-05 19:48:57,111 INFO org.apache.hadoop.yarn.server.resourcemanager.security.DelegationTokenRenewer: application_1757068896457_0013 found existing hdfs token Kind: HDFS_DELEGATION_TOKEN, Service: 192.200.34.42:9000, Ident: (HDFS_DELEGATION_TOKEN token 719 for oge) 2025-09-05 19:48:57,123 INFO org.apache.hadoop.yarn.server.resourcemanager.security.DelegationTokenRenewer: Renewed delegation-token= [Kind: HDFS_DELEGATION_TOKEN, Service: 192.200.34.42:9000, Ident: (HDFS_DELEGATION_TOKEN token 719 for oge);exp=1757159337121; apps=[application_1757068896457_0013]], for [application_1757068896457_0013] 2025-09-05 19:48:57,123 INFO org.apache.hadoop.yarn.server.resourcemanager.security.DelegationTokenRenewer: Renew Kind: HDFS_DELEGATION_TOKEN, Service: 192.200.34.42:9000, Ident: (HDFS_DELEGATION_TOKEN token 719 for oge);exp=1757159337121; apps=[application_1757068896457_0013] in 86399998 ms, appId = [application_1757068896457_0013] 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: Storing application with id application_1757068896457_0013 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1757068896457_0013 State change from NEW to NEW_SAVING 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.recovery.RMStateStore: Storing info for app: application_1757068896457_0013 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1757068896457_0013 State change from NEW_SAVING to SUBMITTED 2025-09-05 19:48:57,124 INFO 
org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Application added - appId: application_1757068896457_0013 user: oge leaf-queue of parent: root #applications: 1 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Accepted application application_1757068896457_0013 from user: oge, in queue: default 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1757068896457_0013 State change from SUBMITTED to ACCEPTED 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Registering app attempt : appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,124 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from NEW to SUBMITTED 2025-09-05 19:48:57,125 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application application_1757068896457_0013 from user: oge activated in queue: default 2025-09-05 19:48:57,125 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application added - appId: application_1757068896457_0013 user: org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue$User@22aac5cf, leaf-queue: default #user-pending-applications: 0 #user-active-applications: 1 #queue-pending-applications: 0 #queue-active-applications: 1 2025-09-05 19:48:57,125 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Added Application Attempt appattempt_1757068896457_0013_000001 to scheduler from user oge in queue default 2025-09-05 19:48:57,128 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from SUBMITTED to SCHEDULED 2025-09-05 19:48:57,200 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: 
container_1757068896457_0013_01_000001 Container Transitioned from NEW to ALLOCATED 2025-09-05 19:48:57,200 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge OPERATION=AM Allocated Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1757068896457_0013 CONTAINERID=container_1757068896457_0013_01_000001 2025-09-05 19:48:57,201 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Assigned container container_1757068896457_0013_01_000001 of capacity <memory:3072, vCores:1> on host spark-slave1.example.com:34599, which has 1 containers, <memory:3072, vCores:1> used and <memory:406528, vCores:89> available after allocation 2025-09-05 19:48:57,201 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: assignedContainer application attempt=appattempt_1757068896457_0013_000001 container=Container: [ContainerId: container_1757068896457_0013_01_000001, NodeId: spark-slave1.example.com:34599, NodeHttpAddress: spark-slave1.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: null, ] queue=default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0 clusterResource=<memory:1228800, vCores:270> type=OFF_SWITCH 2025-09-05 19:48:57,201 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Re-sorting assigned queue: root.default stats: default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:3072, vCores:1>, usedCapacity=0.0025, absoluteUsedCapacity=0.0025, numApps=1, numContainers=1 2025-09-05 19:48:57,201 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: assignedContainer queue=root usedCapacity=0.0025 absoluteUsedCapacity=0.0025 used=<memory:3072, vCores:1> cluster=<memory:1228800, vCores:270> 2025-09-05 19:48:57,202 INFO org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Sending NMToken 
for nodeId : spark-slave1.example.com:34599 for container : container_1757068896457_0013_01_000001 2025-09-05 19:48:57,203 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_01_000001 Container Transitioned from ALLOCATED to ACQUIRED 2025-09-05 19:48:57,203 INFO org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Clear node set for appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,203 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Storing attempt: AppId: application_1757068896457_0013 AttemptId: appattempt_1757068896457_0013_000001 MasterContainer: Container: [ContainerId: container_1757068896457_0013_01_000001, NodeId: spark-slave1.example.com:34599, NodeHttpAddress: spark-slave1.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.182:34599 }, ] 2025-09-05 19:48:57,203 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from SCHEDULED to ALLOCATED_SAVING 2025-09-05 19:48:57,203 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from ALLOCATED_SAVING to ALLOCATED 2025-09-05 19:48:57,204 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Launching masterappattempt_1757068896457_0013_000001 2025-09-05 19:48:57,206 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Setting up container Container: [ContainerId: container_1757068896457_0013_01_000001, NodeId: spark-slave1.example.com:34599, NodeHttpAddress: spark-slave1.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.182:34599 }, ] for AM appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,206 INFO 
org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Command to launch container container_1757068896457_0013_01_000001 : {{JAVA_HOME}}/bin/java,-server,-Xmx2048m,-Djava.io.tmpdir={{PWD}}/tmp,-Dspark.yarn.app.container.log.dir=<LOG_DIR>,org.apache.spark.deploy.yarn.ApplicationMaster,--class,'TriggerBatch',--jar,local:/computation_ogc.jar,--arg,' { \"0\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.export\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.addStyles\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.aspect\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Service.getCoverage\",\"arguments\": { \"coverageID\": { \"constantValue\":\"ASTGTM_N28E056\" } ,\"baseUrl\": { \"constantValue\":\"http://localhost\" } ,\"productID\": { \"constantValue\":\"ASTER_GDEM_DEM30\" } } } } ,\"radius\": { \"constantValue\":1 } } } } ,\"min\": { \"constantValue\":-1 } ,\"max\": { \"constantValue\":1 } ,\"palette\": { \"constantValue\":[\"#808080\",\"#949494\",\"#a9a9a9\",\"#bdbebd\",\"#d3d3d3\",\"#e9e9e9\"] } } } } } } } ,\"isBatch\":1 } ',--arg,'f950cff2-07c8-461a-9c24-9162d59e2666_1757072928459_0',--arg,'f950cff2-07c8-461a-9c24-9162d59e2666',--arg,'EPSG:4326',--arg,'1000',--arg,'result',--arg,'file_2025_09_05_19_48_47',--arg,'tif',--properties-file,{{PWD}}/__spark_conf__/__spark_conf__.properties,--dist-cache-conf,{{PWD}}/__spark_conf__/__spark_dist_cache__.properties,1>,<LOG_DIR>/stdout,2>,<LOG_DIR>/stderr 2025-09-05 19:48:57,206 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Create AMRMToken for ApplicationAttempt: appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,206 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Creating password for appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,215 INFO 
org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Done launching container Container: [ContainerId: container_1757068896457_0013_01_000001, NodeId: spark-slave1.example.com:34599, NodeHttpAddress: spark-slave1.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.182:34599 }, ] for AM appattempt_1757068896457_0013_000001 2025-09-05 19:48:57,215 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from ALLOCATED to LAUNCHED 2025-09-05 19:48:58,199 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_01_000001 Container Transitioned from ACQUIRED to RUNNING 2025-09-05 19:49:02,233 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_01_000001 Container Transitioned from RUNNING to COMPLETED 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.common.fica.FiCaSchedulerApp: Completed container: container_1757068896457_0013_01_000001 in state: COMPLETED event:FINISHED 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge OPERATION=AM Released Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1757068896457_0013 CONTAINERID=container_1757068896457_0013_01_000001 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Released container container_1757068896457_0013_01_000001 of capacity <memory:3072, vCores:1> on host spark-slave1.example.com:34599, which currently has 0 containers, <memory:0, vCores:0> used and <memory:409600, vCores:90> available, release resources=true 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: default used=<memory:0, vCores:0> numContainers=0 user=oge user-resources=<memory:0, 
vCores:0> 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: completedContainer container=Container: [ContainerId: container_1757068896457_0013_01_000001, NodeId: spark-slave1.example.com:34599, NodeHttpAddress: spark-slave1.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.182:34599 }, ] queue=default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0 cluster=<memory:1228800, vCores:270> 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: completedContainer queue=root usedCapacity=0.0 absoluteUsedCapacity=0.0 used=<memory:0, vCores:0> cluster=<memory:1228800, vCores:270> 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Re-sorting completed queue: root.default stats: default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Application attempt appattempt_1757068896457_0013_000001 released container container_1757068896457_0013_01_000001 on node: host: spark-slave1.example.com:34599 #containers=0 available=<memory:409600, vCores:90> used=<memory:0, vCores:0> with event: FINISHED 2025-09-05 19:49:02,234 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Updating application attempt appattempt_1757068896457_0013_000001 with final state: FAILED, and exit status: 13 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from LAUNCHED to FINAL_SAVING 2025-09-05 19:49:02,235 INFO 
org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Unregistering app attempt : appattempt_1757068896457_0013_000001 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Application finished, removing password for appattempt_1757068896457_0013_000001 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000001 State change from FINAL_SAVING to FAILED 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: The number of failed attempts is 1. The max attempts is 2 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Registering app attempt : appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from NEW to SUBMITTED 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Application Attempt appattempt_1757068896457_0013_000001 is done. 
finalState=FAILED 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.AppSchedulingInfo: Application application_1757068896457_0013 requests cleared 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application removed - appId: application_1757068896457_0013 user: oge queue: default #user-pending-applications: 0 #user-active-applications: 0 #queue-pending-applications: 0 #queue-active-applications: 0 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application application_1757068896457_0013 from user: oge activated in queue: default 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application added - appId: application_1757068896457_0013 user: org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue$User@5b887260, leaf-queue: default #user-pending-applications: 0 #user-active-applications: 1 #queue-pending-applications: 0 #queue-active-applications: 1 2025-09-05 19:49:02,235 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Added Application Attempt appattempt_1757068896457_0013_000002 to scheduler from user oge in queue default 2025-09-05 19:49:02,236 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from SUBMITTED to SCHEDULED 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_02_000001 Container Transitioned from NEW to ALLOCATED 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge OPERATION=AM Allocated Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1757068896457_0013 CONTAINERID=container_1757068896457_0013_02_000001 2025-09-05 19:49:02,701 INFO 
org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Assigned container container_1757068896457_0013_02_000001 of capacity <memory:3072, vCores:1> on host spark-master.example.com:45563, which has 1 containers, <memory:3072, vCores:1> used and <memory:406528, vCores:89> available after allocation 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: assignedContainer application attempt=appattempt_1757068896457_0013_000002 container=Container: [ContainerId: container_1757068896457_0013_02_000001, NodeId: spark-master.example.com:45563, NodeHttpAddress: spark-master.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: null, ] queue=default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0 clusterResource=<memory:1228800, vCores:270> type=OFF_SWITCH 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Re-sorting assigned queue: root.default stats: default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:3072, vCores:1>, usedCapacity=0.0025, absoluteUsedCapacity=0.0025, numApps=1, numContainers=1 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: assignedContainer queue=root usedCapacity=0.0025 absoluteUsedCapacity=0.0025 used=<memory:3072, vCores:1> cluster=<memory:1228800, vCores:270> 2025-09-05 19:49:02,701 INFO org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Sending NMToken for nodeId : spark-master.example.com:45563 for container : container_1757068896457_0013_02_000001 2025-09-05 19:49:02,703 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_02_000001 Container Transitioned from ALLOCATED to ACQUIRED 2025-09-05 19:49:02,703 INFO 
org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Clear node set for appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,703 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Storing attempt: AppId: application_1757068896457_0013 AttemptId: appattempt_1757068896457_0013_000002 MasterContainer: Container: [ContainerId: container_1757068896457_0013_02_000001, NodeId: spark-master.example.com:45563, NodeHttpAddress: spark-master.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.42:45563 }, ] 2025-09-05 19:49:02,703 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from SCHEDULED to ALLOCATED_SAVING 2025-09-05 19:49:02,703 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from ALLOCATED_SAVING to ALLOCATED 2025-09-05 19:49:02,704 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Launching masterappattempt_1757068896457_0013_000002 2025-09-05 19:49:02,717 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Setting up container Container: [ContainerId: container_1757068896457_0013_02_000001, NodeId: spark-master.example.com:45563, NodeHttpAddress: spark-master.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.42:45563 }, ] for AM appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,717 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Command to launch container container_1757068896457_0013_02_000001 : {{JAVA_HOME}}/bin/java,-server,-Xmx2048m,-Djava.io.tmpdir={{PWD}}/tmp,-Dspark.yarn.app.container.log.dir=<LOG_DIR>,org.apache.spark.deploy.yarn.ApplicationMaster,--class,'TriggerBatch',--jar,local:/computation_ogc.jar,--arg,' 
{ \"0\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.export\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.addStyles\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Coverage.aspect\",\"arguments\": { \"coverage\": { \"functionInvocationValue\": { \"functionName\":\"Service.getCoverage\",\"arguments\": { \"coverageID\": { \"constantValue\":\"ASTGTM_N28E056\" } ,\"baseUrl\": { \"constantValue\":\"http://localhost\" } ,\"productID\": { \"constantValue\":\"ASTER_GDEM_DEM30\" } } } } ,\"radius\": { \"constantValue\":1 } } } } ,\"min\": { \"constantValue\":-1 } ,\"max\": { \"constantValue\":1 } ,\"palette\": { \"constantValue\":[\"#808080\",\"#949494\",\"#a9a9a9\",\"#bdbebd\",\"#d3d3d3\",\"#e9e9e9\"] } } } } } } } ,\"isBatch\":1 } ',--arg,'f950cff2-07c8-461a-9c24-9162d59e2666_1757072928459_0',--arg,'f950cff2-07c8-461a-9c24-9162d59e2666',--arg,'EPSG:4326',--arg,'1000',--arg,'result',--arg,'file_2025_09_05_19_48_47',--arg,'tif',--properties-file,{{PWD}}/__spark_conf__/__spark_conf__.properties,--dist-cache-conf,{{PWD}}/__spark_conf__/__spark_dist_cache__.properties,1>,<LOG_DIR>/stdout,2>,<LOG_DIR>/stderr 2025-09-05 19:49:02,717 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Create AMRMToken for ApplicationAttempt: appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,717 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Creating password for appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,726 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Done launching container Container: [ContainerId: container_1757068896457_0013_02_000001, NodeId: spark-master.example.com:45563, NodeHttpAddress: spark-master.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, service: 192.200.34.42:45563 }, ] for AM 
appattempt_1757068896457_0013_000002 2025-09-05 19:49:02,726 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from ALLOCATED to LAUNCHED 2025-09-05 19:49:03,701 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_02_000001 Container Transitioned from ACQUIRED to RUNNING 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_1757068896457_0013_02_000001 Container Transitioned from RUNNING to COMPLETED 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.common.fica.FiCaSchedulerApp: Completed container: container_1757068896457_0013_02_000001 in state: COMPLETED event:FINISHED 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge OPERATION=AM Released Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1757068896457_0013 CONTAINERID=container_1757068896457_0013_02_000001 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Released container container_1757068896457_0013_02_000001 of capacity <memory:3072, vCores:1> on host spark-master.example.com:45563, which currently has 0 containers, <memory:0, vCores:0> used and <memory:409600, vCores:90> available, release resources=true 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: default used=<memory:0, vCores:0> numContainers=0 user=oge user-resources=<memory:0, vCores:0> 2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: completedContainer container=Container: [ContainerId: container_1757068896457_0013_02_000001, NodeId: spark-master.example.com:45563, NodeHttpAddress: spark-master.example.com:8042, Resource: <memory:3072, vCores:1>, Priority: 0, Token: Token { kind: ContainerToken, 
service: 192.200.34.42:45563 }, ] queue=default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0 cluster=<memory:1228800, vCores:270>

2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: completedContainer queue=root usedCapacity=0.0 absoluteUsedCapacity=0.0 used=<memory:0, vCores:0> cluster=<memory:1228800, vCores:270>

2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Re-sorting completed queue: root.default stats: default: capacity=1.0, absoluteCapacity=1.0, usedResources=<memory:0, vCores:0>, usedCapacity=0.0, absoluteUsedCapacity=0.0, numApps=1, numContainers=0

2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Application attempt appattempt_1757068896457_0013_000002 released container container_1757068896457_0013_02_000001 on node: host: spark-master.example.com:45563 #containers=0 available=<memory:409600, vCores:90> used=<memory:0, vCores:0> with event: FINISHED

2025-09-05 19:49:07,673 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Updating application attempt appattempt_1757068896457_0013_000002 with final state: FAILED, and exit status: 13

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from LAUNCHED to FINAL_SAVING

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Unregistering app attempt : appattempt_1757068896457_0013_000002

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Application finished, removing password for appattempt_1757068896457_0013_000002

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1757068896457_0013_000002 State change from FINAL_SAVING to FAILED

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: The number of failed attempts is 2. The max attempts is 2

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: Updating application application_1757068896457_0013 with final state: FAILED

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1757068896457_0013 State change from ACCEPTED to FINAL_SAVING

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.recovery.RMStateStore: Updating info for app: application_1757068896457_0013

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Application Attempt appattempt_1757068896457_0013_000002 is done. finalState=FAILED

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.AppSchedulingInfo: Application application_1757068896457_0013 requests cleared

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application removed - appId: application_1757068896457_0013 user: oge queue: default #user-pending-applications: 0 #user-active-applications: 0 #queue-pending-applications: 0 #queue-active-applications: 0

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: Application application_1757068896457_0013 failed 2 times due to AM Container for appattempt_1757068896457_0013_000002 exited with exitCode: 13
For more detailed output, check application tracking page:http://spark-master:8088/cluster/app/application_1757068896457_0013Then, click on links to logs of each attempt.
Diagnostics: Exception from container-launch.
Container id: container_1757068896457_0013_02_000001
Exit code: 13
Stack trace: ExitCodeException exitCode=13:
at org.apache.hadoop.util.Shell.runCommand(Shell.java:582)
at org.apache.hadoop.util.Shell.run(Shell.java:479)
at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:773)
at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:212)
at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)
at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)
at java.util.concurrent.FutureTask.run(FutureTask.java:266)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:750)
Container exited with a non-zero exit code 13
Failing this attempt. Failing the application.

2025-09-05 19:49:07,674 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1757068896457_0013 State change from FINAL_SAVING to FAILED

2025-09-05 19:49:07,675 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Application removed - appId: application_1757068896457_0013 user: oge leaf-queue of parent: root #applications: 0

2025-09-05 19:49:07,675 WARN org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=oge OPERATION=Application Finished - Failed TARGET=RMAppManager RESULT=FAILURE DESCRIPTION=App failed with state: FAILED PERMISSIONS=Application application_1757068896457_0013 failed 2 times due to AM Container for appattempt_1757068896457_0013_000002 exited with exitCode: 13
For more detailed output, check application tracking page:http://spark-master:8088/cluster/app/application_1757068896457_0013Then, click on links to logs of each attempt.
Diagnostics: Exception from container-launch.
Container id: container_1757068896457_0013_02_000001
Exit code: 13
Stack trace: ExitCodeException exitCode=13:
at org.apache.hadoop.util.Shell.runCommand(Shell.java:582)
at org.apache.hadoop.util.Shell.run(Shell.java:479)
at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:773)
at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:212)
at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)
at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)
at java.util.concurrent.FutureTask.run(FutureTask.java:266)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:750)
Container exited with a non-zero exit code 13
Failing this attempt. Failing the application. APPID=application_1757068896457_0013

2025-09-05 19:49:07,676 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAppManager$ApplicationSummary: appId=application_1757068896457_0013,name=TriggerBatch,user=oge,queue=default,state=FAILED,trackingUrl=http://spark-master:8088/cluster/app/application_1757068896457_0013,appMasterHost=N/A,startTime=1757072937110,finishTime=1757072947674,finalStatus=FAILED,memorySeconds=30734,vcoreSeconds=9,preemptedAMContainers=0,preemptedNonAMContainers=0,preemptedResources=<memory:0\, vCores:0>,applicationType=SPARK

2025-09-05 19:49:11,054 INFO org.apache.hadoop.ipc.Server: IPC Server handler 35 on 8032, call org.apache.hadoop.yarn.api.ApplicationClientProtocolPB.getContainerReport from 192.200.34.42:44582 Call#44 Retry#0 org.apache.hadoop.yarn.exceptions.ContainerNotFoundException: Container with id 'container_1757068896457_0013_02_000001' doesn't exist in RM.
at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.getContainerReport(ClientRMService.java:464)
at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.getContainerReport(ApplicationClientProtocolPBServiceImpl.java:394)
at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:445)
at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)
at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)
at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)

The permissions are as follows:

[oge@spark-master ~]$ cd /data/data/hadoop_data/
dfs/ nm-local-dir/
[oge@spark-master ~]$ cd /data/data/hadoop_data/nm-local-dir/
filecache/ nmPrivate/ usercache/
[oge@spark-master ~]$ cd /data/data/hadoop_data/nm-local-dir/usercache/
[oge@spark-master usercache]$ ll
total 0
drwxr-x--- 4 oge oge 39 Sep 5 18:45 oge
[oge@spark-master usercache]$ ll oge
total 4.0K
drwx--x--- 2 oge oge 6 Sep 5 19:30 appcache
drwx--x--- 72 oge oge 4.0K Sep 5 19:30 filecache
[oge@spark-master usercache]$ ll oge/appcache/
total 0
[oge@spark-master usercache]$ ll oge/filecache/
total 0
drwxr-xr-x 3 oge oge 24 Sep 5 18:45 10

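I assumed all along that this was a permissions problem and spent a long time troubleshooting it. These are the things I tried:

Enabled YARN debug logging.

Looked for the local logs of application_1757068896457_0013. There were none (local log cleanup is set to 30 minutes), and hdfs -logs (presumably meaning yarn logs -applicationId) returned nothing either.

Suspected that the JSON was the problem.

Suspected the permissions on the jar: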

namei /computation_ogc.jar

ls -l /computation_ogc.jar

ls -l /jars

None of these showed any problem.
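The namei check above walks every component of a path and prints its permissions. For readers who want to script the same check, here is a rough Python sketch; the function name `path_permissions` is made up for this illustration, and it only reports the mode bits, it does not decide whether YARN can actually read the file.

```python
import os
import stat
from pathlib import Path


def path_permissions(path):
    """List (component, mode string, owner uid) for a path and all of its
    parent directories, starting at the filesystem root.

    Roughly what `namei -l` prints; hypothetical helper, not part of any tool.
    """
    resolved = Path(path).resolve()
    # Path.parents goes from the nearest parent up to the root, so reverse it.
    components = list(reversed(resolved.parents)) + [resolved]
    rows = []
    for component in components:
        info = os.stat(component)
        rows.append((str(component), stat.filemode(info.st_mode), info.st_uid))
    return rows


if __name__ == "__main__":
    # "/" is used only so the example runs anywhere; substitute the jar path.
    for name, mode, uid in path_permissions("/"):
        print(mode, uid, name)
```

A directory that lacks the execute bit for the user running the container would show up here as a missing `x` in its mode string.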

In the end, at an AI's suggestion, I tried a submission with minimal parameters:

{ "file": "local:/computation_ogc.jar", "className": "TriggerBatch", "args": ["test"], "numExecutors": 1, "driverMemory": "1g", "executorMemory": "1g", "driverCores": 1, "executorCores": 1 }
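Once the minimal request works, the remaining job is to find which of the removed settings breaks the batch. One way to do that systematically is to resubmit the failing conf with one key left out each time and see which removal makes the job succeed. The sketch below only illustrates that idea: `submit` is a hypothetical callable that would POST the payload to Livy and return True on success, and `fake_submit` is a stand-in that simply mimics the outcome described in this post so the example runs without a cluster.

```python
import copy

# Values copied from the two requests shown in this post.
BASE = {
    "file": "local:/computation_ogc.jar",
    "className": "TriggerBatch",
    "args": ["test"],
}
FAILING_CONF = {
    "spark.kryoserializer.buffer.max": "5g",
    "spark.driver.maxResultSize": "2g",
    "spark.executor.extraClassPath": "local://jars/*",
    "spark.driver.extraClassPath": "local:/jars/*",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    "spark.executor.memoryOverhead": "2g",
}


def leave_one_out_payloads(base, conf):
    """Yield (removed_key, payload) with exactly one conf key dropped."""
    for removed in conf:
        payload = copy.deepcopy(base)
        payload["conf"] = {k: v for k, v in conf.items() if k != removed}
        yield removed, payload


def find_culprit_keys(base, conf, submit):
    """Return the keys whose removal turns a failing batch into a passing one."""
    return [key for key, payload in leave_one_out_payloads(base, conf)
            if submit(payload)]


def fake_submit(payload):
    """Stand-in for a real submission (assumption): fails only on the 5g setting."""
    return payload["conf"].get("spark.kryoserializer.buffer.max") != "5g"


if __name__ == "__main__":
    print(find_culprit_keys(BASE, FAILING_CONF, fake_submit))
```

Leaving one key out, rather than adding one key at a time, also catches failures that need two settings together, since removing either of them would make the batch pass.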

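It turned out that "spark.kryoserializer.buffer.max": "5g" was the cause. Spark's configuration documentation requires this value to be less than 2048m, so 5g is out of range. Because the job hardly ran on YARN at all, no specific error was shown, and nothing useful could be seen in either the ResourceManager or the NodeManager logs.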
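Since YARN gave no hint here, a small check on the client side before submitting would have caught this much earlier. The following is a minimal sketch under a few assumptions: the size parser is a simplified one written for this example (it is not Spark's own parser and only understands k, m, g and t suffixes, treating a bare number as MiB), and the names `to_mib` and `check_kryo_buffer_max` are made up. The 2048 MiB limit is the one stated in Spark's configuration documentation.

```python
import re

# Multipliers to MiB for the suffixes this simplified parser understands.
_UNIT_TO_MIB = {"k": 1 / 1024, "m": 1, "g": 1024, "t": 1024 * 1024}
KRYO_KEY = "spark.kryoserializer.buffer.max"
KRYO_LIMIT_MIB = 2048  # the value must stay below this


def to_mib(size):
    """Convert strings such as '64m' or '5g' to MiB (simplified, see above)."""
    match = re.fullmatch(r"\s*(\d+)\s*([kmgt]?)b?\s*", size.lower())
    if match is None:
        raise ValueError(f"unrecognised size: {size!r}")
    number, unit = int(match.group(1)), match.group(2) or "m"
    return number * _UNIT_TO_MIB[unit]


def check_kryo_buffer_max(conf):
    """Return a warning string if the Kryo buffer limit is exceeded, else None."""
    value = conf.get(KRYO_KEY)
    if value is None:
        return None
    mib = to_mib(value)
    if mib >= KRYO_LIMIT_MIB:
        return (f"{KRYO_KEY}={value} is {mib:g} MiB, "
                f"but it must be less than {KRYO_LIMIT_MIB} MiB")
    return None


if __name__ == "__main__":
    print(check_kryo_buffer_max({KRYO_KEY: "5g"}))
    print(check_kryo_buffer_max({KRYO_KEY: "1g"}))
```

Running such a check against the "conf" object of the batch request before calling Livy would flag the 5g value from this post and let a value like 1g through.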